This blog has moved. This is reposted from Paired Ends:

https://blog.stephenturner.us/p/create-a-free-llama-405b-llm-chatbot-github-repo-huggingface

Llama 3.1 405B is the first open-source LLM on par with frontier models GPT-4o and Claude 3.5 Sonnet. I’ve been running the 70B model locally for a while now using Ollama + Open WebUI, but you’re not going to run the 405B model on your MacBook.

Here I demonstrate how to create and deploy a Llama 3.1 405B-powered chatbot for any GitHub repo in under a minute on HuggingFace Assistants, using an R package as an example.

Create and deploy a HuggingFace Assistant

I’m going to use the tfboot R package as an example here (paper, GitHub). I wrote tfboot to bootstrap transcription factor binding site disruption: it statistically quantifies the impact of that disruption across gene sets of interest by comparing it against an empirical null distribution. The package is meant to integrate with Bioconductor data structures and workflows on the front end, and Tidyverse-friendly tools on the back end. You can read more about the package in the paper.
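For readers unfamiliar with the idea, here is a minimal, generic sketch of testing a gene set against an empirical null. It is not tfboot’s implementation (tfboot is written in R); the per-gene disruption scores, the mean as the test statistic, and resampling random gene sets of the same size without replacement are all assumptions made for illustration, and `empirical_null_pvalue` is a hypothetical name.

```python
import random
from statistics import mean


def empirical_null_pvalue(scores, gene_set, n_boot=10_000, seed=1):
    """Compare a gene set's mean disruption score to random gene sets of equal size.

    scores: dict mapping gene -> disruption score (hypothetical input format)
    gene_set: genes of interest (all must be keys of `scores`)
    Returns (observed statistic, one-sided empirical p-value).
    """
    rng = random.Random(seed)
    universe = list(scores)
    k = len(gene_set)

    # Observed statistic for the gene set of interest
    observed = mean(scores[g] for g in gene_set)

    # Empirical null: the same statistic on randomly drawn gene sets of size k
    null = [mean(scores[g] for g in rng.sample(universe, k)) for _ in range(n_boot)]

    # Fraction of null draws at least as extreme as observed,
    # with an add-one correction so the p-value is never exactly zero
    n_extreme = sum(1 for x in null if x >= observed)
    p = (n_extreme + 1) / (n_boot + 1)
    return observed, p
```

The add-one correction is a common convention for empirical p-values: with `n_boot` resamples, the smallest reportable p-value is `1 / (n_boot + 1)`, which reflects the resolution of the null distribution rather than claiming impossible certainty.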

The 42-second video below demonstrates how to create & deploy your chatbot.

- First, go to HuggingFace Assistants (https://huggingface.co/chat/assistants) and click Create new assistant.

- Fill in some details. Give your chatbot a name and description, and a system prompt (“You are a chatbot that answers questions about the tfboot codebase”).

- Select meta-llama/Meta-Llama-3.1-405B-Instruct-FP8 as your model.

- Fill in some example prompts, like “What does this package do?” or “How do I do X, Y, or Z with this tool?”

- Now, the important part. Under Internet Access, select “Specific Links” and provide the URL to the GitHub repo.

- Hit Create, then Activate. You’re done.
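It can help to know how the system prompt and your questions fit together. Chat models like this one typically receive the system prompt as the first message of every conversation, followed by alternating user and assistant turns. The sketch below assumes the role/content messages format used by OpenAI-style chat-completion APIs, which HuggingFace’s chat-completion endpoints also accept; `build_messages` is a hypothetical helper, and the sketch does not show how HuggingChat fetches and injects the content of the Specific Links you provided.

```python
def build_messages(system_prompt, user_prompt, history=None):
    """Assemble a chat request in the role/content messages format.

    history: optional list of (user, assistant) turns from earlier in the chat.
    """
    # The system prompt always comes first and frames every later turn
    messages = [{"role": "system", "content": system_prompt}]
    # Replay earlier turns so the model sees the conversation so far
    for user_turn, assistant_turn in history or []:
        messages.append({"role": "user", "content": user_turn})
        messages.append({"role": "assistant", "content": assistant_turn})
    # The new question goes last
    messages.append({"role": "user", "content": user_prompt})
    return messages


msgs = build_messages(
    "You are a chatbot that answers questions about the tfboot codebase",
    "What does this package do?",
)
```

A list like `msgs` is the shape you would pass as `messages` to a chat-completion client such as `huggingface_hub.InferenceClient.chat_completion` if you wanted to query a model outside the web interface.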

Demo with the tfboot R package

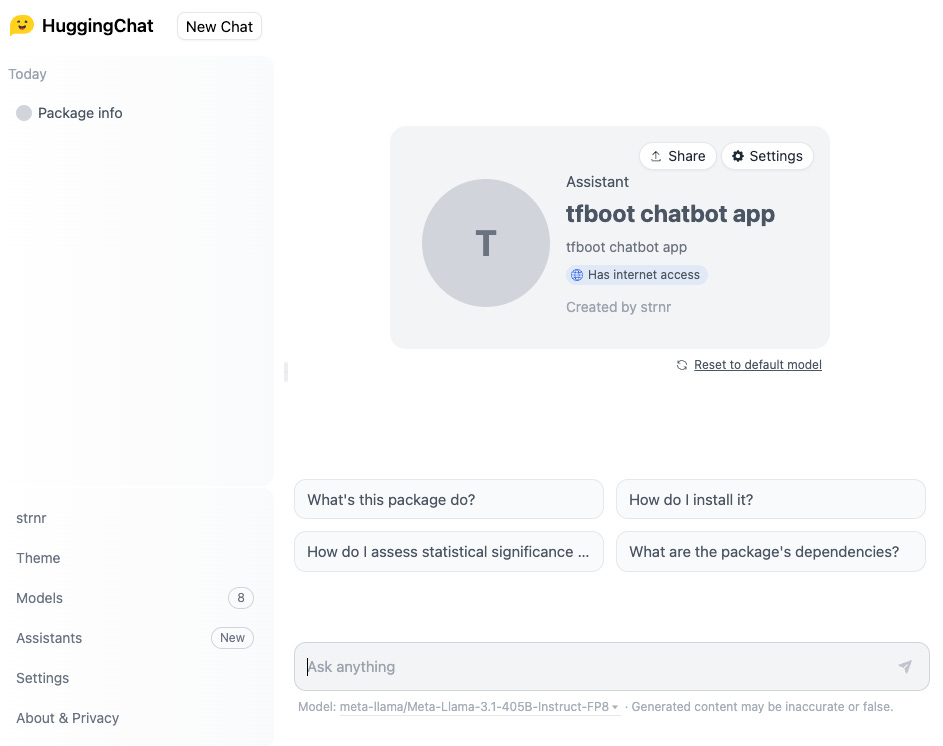

Once you create and activate your model, you’ll see an interface that will look familiar if you’ve ever used ChatGPT or similar LLMs.

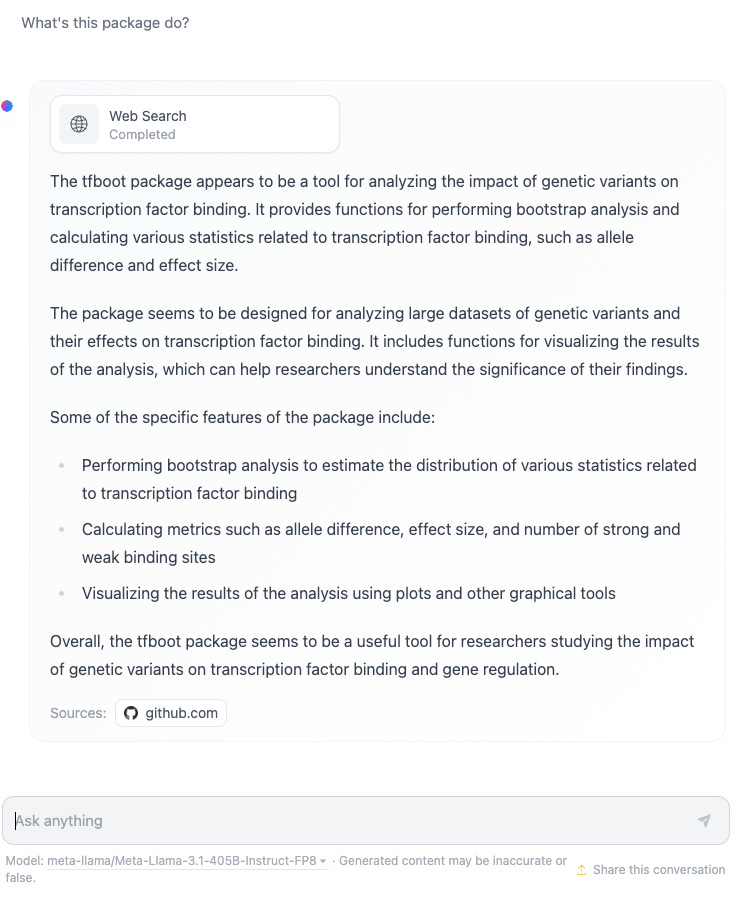

From here you can click one of the example prompts, or type your own prompt. Let’s give it a try. First, a softball pitch. What does this package do? This should be fairly obvious from the README.

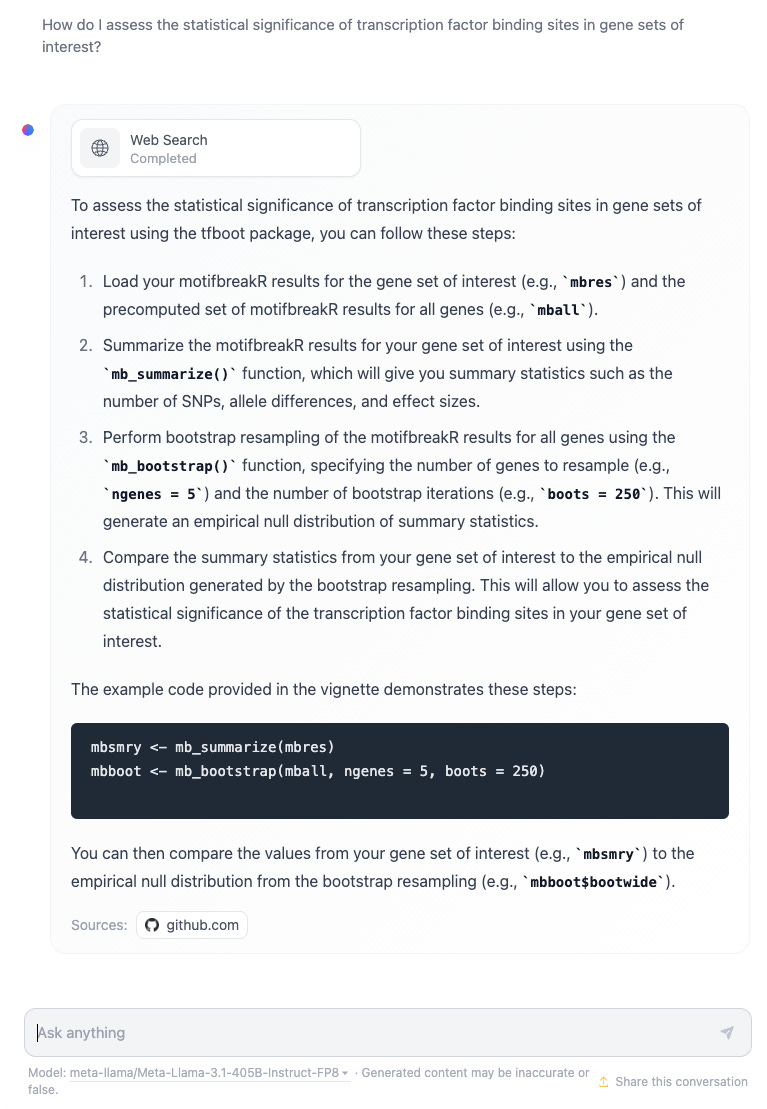

Next, let’s get a little basic info on usage. The chatbot looks through the package’s RMarkdown vignettes and pulls out a high-level protocol on how to run the analysis. There wasn’t much context on what motifbreakR is or what you have to do upstream of running tfboot, but further prompting can help with this.

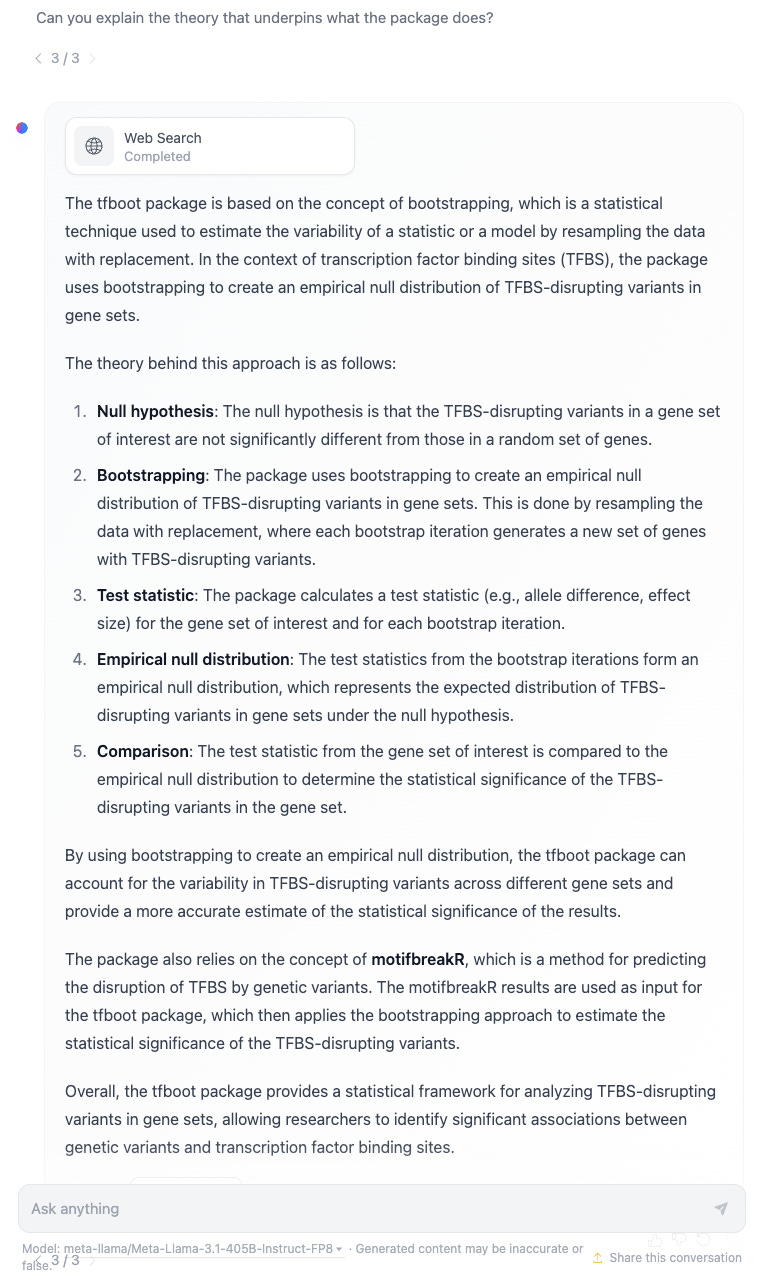

Finally, let’s see what it can tell us about the statistical underpinnings of what the package is doing. Notably, this isn’t simply a regurgitation of what’s in the package documentation or vignettes. It combines the package’s code and documentation with general knowledge about bootstrapping, null hypothesis significance testing, and transcription factor binding site disruption analysis.

Keep in mind that any assistant you create will be public. You can play around with the tfboot chatbot here. Also, know that the 405B model is extremely resource intensive. A few times the bot would time out and I’d have to retry the prompt. This happens far less often with the 70B model, and its responses are faster. Experiment to find the speed/accuracy sweet spot for your specific needs.
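If you query a model programmatically rather than through the web interface, you can automate the retry step instead of resubmitting prompts by hand. Below is a minimal sketch of retrying with exponential backoff, assuming the call raises Python’s built-in `TimeoutError` on a timeout; `call_with_retries` and its parameters are hypothetical, and a real client library may raise its own exception types, which you would pass via `retry_on`.

```python
import time


def call_with_retries(call, max_attempts=4, base_delay=1.0,
                      retry_on=(TimeoutError,), sleep=time.sleep):
    """Call `call()` and retry on timeout, doubling the wait after each failure."""
    for attempt in range(max_attempts):
        try:
            return call()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last timeout
            # Wait base_delay, then 2x, 4x, ... before the next attempt
            sleep(base_delay * 2 ** attempt)
```

The `sleep` parameter is injectable so the backoff schedule can be checked without actually waiting; in normal use you would leave it as `time.sleep`.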